Āpta: Fault-tolerant object-granular CXL disaggregated memory for accelerating FaaS

► Appears in International Conference on Dependable Systems and Networks - DSN 2023

► Artifact available on GitHub

What is Āpta?

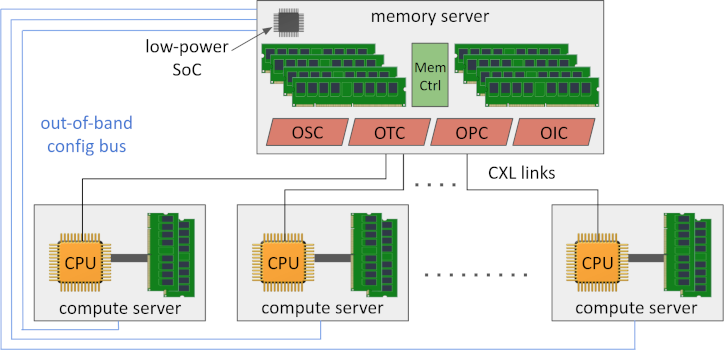

Āpta is a fault-tolerant, CXL-based, shared disaggregated memory system that is specialized as an object store to improve the performance of function-as-a-service (FaaS) applications.

Features and Properties:

What does it provide?

◉ Higher performance than Amazon S3, Amazon ElastiCache, and an RDMA-based in-memory object store

◉ Highest compute-server fault tolerance, similar to Amazon S3

◉ Strongly consistent object store and strict recovery semantics

◉ Flexible, dynamic schedulability of individual functions

◉ Lowers tail latency for function executions

How does it achieve it?

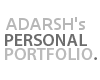

▣ Uses CXL 3.0 shared disaggregated memory to hold shared FaaS objects in a memory server

▣ Builds on the CXL.mem protocol to create object-granularity read/write semantics and allow object caching in compute servers

▣ Transforms the CXL.mem coherence protocol into a highly-available one using lazy (asynchronous) invalidations and coherence-aware scheduling

▣ Architects data plane controllers on the memory and compute servers

▣ Designs the control-plane software components
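
The lazy-invalidation idea in the list above can be illustrated with a toy model. This is a sketch under assumptions, not Āpta's actual protocol: the class `MemoryServer`, its methods, and the `pending` bookkeeping are hypothetical names chosen for illustration. It shows a put committing at the memory server without waiting for other sharers to acknowledge invalidations, so an unresponsive compute server cannot block the write. Stale copies are tracked until the invalidation is delivered.

```python
from collections import defaultdict


class MemoryServer:
    """Toy directory for object-granular lazy invalidation (hypothetical model)."""

    def __init__(self):
        self.store = {}                   # object key -> latest value
        self.sharers = defaultdict(set)   # object key -> servers caching the latest value
        self.pending = defaultdict(set)   # server -> keys whose invalidation is undelivered

    def get(self, server, key):
        # A get returns the latest value and registers the server as a sharer.
        self.pending[server].discard(key)  # the fresh copy replaces any stale one
        self.sharers[key].add(server)
        return self.store[key]

    def put(self, server, key, value):
        # Commit immediately: no waiting for invalidation acks from other sharers.
        self.store[key] = value
        for other in self.sharers[key] - {server}:
            self.pending[other].add(key)   # invalidation queued, delivered later
        self.sharers[key] = {server}

    def deliver_invalidations(self, server):
        # Asynchronous in a real system; called explicitly in this toy model.
        return self.pending.pop(server, set())

    def has_stale_copy(self, server, key):
        # A coherence-aware scheduler could consult this before placing a function.
        return key in self.pending[server]


mem = MemoryServer()
mem.put("A", "img", b"v1")
mem.get("B", "img")            # B now caches v1
mem.put("A", "img", b"v2")     # completes even if B is unreachable
stale_before = mem.has_stale_copy("B", "img")   # True
mem.deliver_invalidations("B")
stale_after = mem.has_stale_copy("B", "img")    # False
```

In this model, correctness depends on the scheduler never running a function that reads `img` on server B while `has_stale_copy` is true, which is the role the list above assigns to coherence-aware scheduling.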

Frequently asked questions (FAQs):

◈ What is the motivation for designing a new coherence protocol? What is CXL lacking?

A: No existing coherence protocol both provides strong consistency (enforcing the single-writer-multiple-reader, or SWMR, invariant) and tolerates compute-server failures. The latest CXL 3.0 protocol is likewise not completely fault-tolerant and does not define sufficient RAS capabilities to deal with compute-server failures. Āpta’s novelty is a simple, straightforward transformation of the CXL.mem protocol to provide fault tolerance, which increases the chance of it being adopted by CXL.

◈ Can these architecture design components be adapted to broader application requirements and characteristics?

A: The architecture design components we introduce – specifically, the Object Serving Controller (OSC) and the Object Persistency Controller (OPC) – are also applicable to other applications currently being investigated for CXL. The OPC is relevant for CXL-supported persistent memory, and the OSC is relevant for address translation support required in memory capacity expansion, near memory accelerators on CXL, and dynamic tiered memory.

◈ Can the hardware components be implemented by software layers to help reduce implementation cost and improve flexibility?

A: Whereas the final Āpta design represents the most efficient hardware design, Āpta’s controllers can be realized in software at the expense of reduced performance. Our analysis already shows that:

(i) employing software layers in place of the OPC+PUT and OSC+GET controllers will incur an average of 32% and 89% higher latency for get and put, respectively.

(ii) forgoing object caching and coherence, thereby eliminating the OTC+OIC controllers, will incur 2%-100% higher latency in function execution time.

◈ Does Āpta increase complexity of FaaS scheduling logic due to the asynchronous invalidation scheme?

A: FaaS schedulers are complex frameworks that correctly and efficiently schedule functions on compute nodes. We see that Āpta’s scheduling objectives can easily fit into the existing stages of the Kubernetes scheduler. (Sec. V-C of the paper has more details.)
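
As a rough illustration of how such objectives might map onto a filter stage followed by a score stage, here is a toy Python sketch. It does not use the Kubernetes scheduler plugin API (which is written in Go), and `Node`, `filter_nodes`, `score_node`, and `schedule` are hypothetical names. The policy shown (exclude nodes holding a not-yet-invalidated copy of an input object, then prefer nodes that already cache the inputs) is an assumption made for illustration and is not necessarily the policy described in the paper.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cached: set = field(default_factory=set)  # objects with a valid cached copy
    stale: set = field(default_factory=set)   # objects awaiting a lazy invalidation


def filter_nodes(nodes, inputs):
    # Filter stage: a node holding a stale copy of any input is not eligible,
    # so a function never reads an outdated object from its local cache.
    return [n for n in nodes if not (n.stale & inputs)]


def score_node(node, inputs):
    # Score stage: prefer nodes that already hold valid copies of the inputs.
    return len(node.cached & inputs)


def schedule(nodes, inputs):
    eligible = filter_nodes(nodes, inputs)
    if not eligible:
        return None  # caller may retry once pending invalidations are delivered
    return max(eligible, key=lambda n: score_node(n, inputs)).name


nodes = [
    Node("n1", cached={"img"}, stale={"meta"}),
    Node("n2", cached={"meta"}),
    Node("n3"),
]
chosen = schedule(nodes, {"img", "meta"})  # "n2": n1 is filtered out, n2 outscores n3
```

The coherence check adds one extra predicate to filtering and one extra term to scoring, which is the sense in which the objectives fit into existing scheduler stages.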

◈ Concerns over the use of write-through cache policy

A: Not every write is written through. Writes in the compute phase of each function are retained in the cache. Only writes to the cache lines that make up a put are written through, at the end of each function. Note that the put write-through policy is necessary for maintaining availability and strong consistency in the presence of compute-server failures. Existing works [ShardStore, Faa$t] have also employed a similar policy.
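
The policy described above can be sketched as follows. This is a minimal toy model under assumptions, not the hardware design: `ComputeCache`, `write`, and `put` are hypothetical names, and cache lines are modeled as dictionary entries keyed by (object key, line index). Writes during the compute phase stay in the cache as dirty lines; only the lines that make up the object being put are written through to the memory server.

```python
class ComputeCache:
    """Toy compute-server cache with write-through only on put (hypothetical)."""

    def __init__(self, memory):
        self.memory = memory   # dict standing in for the memory server
        self.lines = {}        # (object key, line index) -> data
        self.dirty = set()     # lines modified but not yet written through

    def write(self, key, index, data):
        # Compute-phase write: retained in the cache, nothing sent to memory.
        self.lines[(key, index)] = data
        self.dirty.add((key, index))

    def put(self, key):
        # At function end, write through only the dirty lines of this object.
        flushed = sorted(line for line in self.dirty if line[0] == key)
        for line in flushed:
            self.memory[line] = self.lines[line]
            self.dirty.discard(line)
        return len(flushed)


memory = {}
cache = ComputeCache(memory)
cache.write("tmp", 0, b"scratch")   # intermediate data, never put
cache.write("out", 0, b"aa")
cache.write("out", 1, b"bb")
written = cache.put("out")          # 2 lines written through
```

After the put, `memory` holds both lines of `out`, while the scratch line for `tmp` remains dirty in the cache and never reaches the memory server.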

◈ Complexity/cost of the custom hardware features in the solution

A: A baseline shared, pooled CXL DM configuration and several other proposed CXL configurations [Pond-ASPLOS23, Beacon-MICRO22, MemVerge] already require controllers for address translation, directory, invalidation and persistence. Āpta makes relatively small changes to these controllers to add object semantics and lazy invalidations. In addition, the introduced controllers are broadly applicable to other applications currently being investigated for CXL. Specifically, the OPC is relevant for CXL-supported persistent memory [PAX-HotStorage17], and the OSC is relevant for address translation support required in memory capacity expansion [Pond-ASPLOS23], near memory accelerators on CXL [Beacon-MICRO22], and dynamic tiered memory [MemVerge]. The proposed hardware therefore comes at a relatively minor cost while offering general benefit and wide applicability.

Āpta Trivia:

◈ Āpta is inspired by distributed system deployments where software often enforces consistency. We bring this insight into shared memory architecture and enable the software scheduler to enforce cache coherence and consistency.

◈ Āpta's philosophy is rooted in a holistic design approach, leveraging the time-tested "end-to-end argument". We design the hardware architecture with a full understanding of the application and middleware.

◈ The name of the project, Āpta, is derived from the Sanskrit word (आप्त) which means "trustworthy" or "reliable", referring here to the enhanced reliability provided by our system.

- Computer Architecture

- CXL 3.0 Disaggregated Mem

- Memory Reliability

- CXL.mem coherence

- Function-as-a-service

- Object / key-value store

- Research Paper

Paper

Slides

Talk

Source (GitHub)